Build your AI infrastructure for scale

From silicon to software. From development to production. Get all the open source components you need to build your AI infrastructure. Go to market quickly with an integrated end-to-end stack and start extracting value from your data at scale.

Why Canonical for enterprise AI infrastructure?

- Deep hardware enablement optimized for AI performance

- Cost-effective model with one subscription for the entire stack

- Run your workloads anywhere – on premises, in public clouds, or hybrid environments

- 100% open source with enterprise support

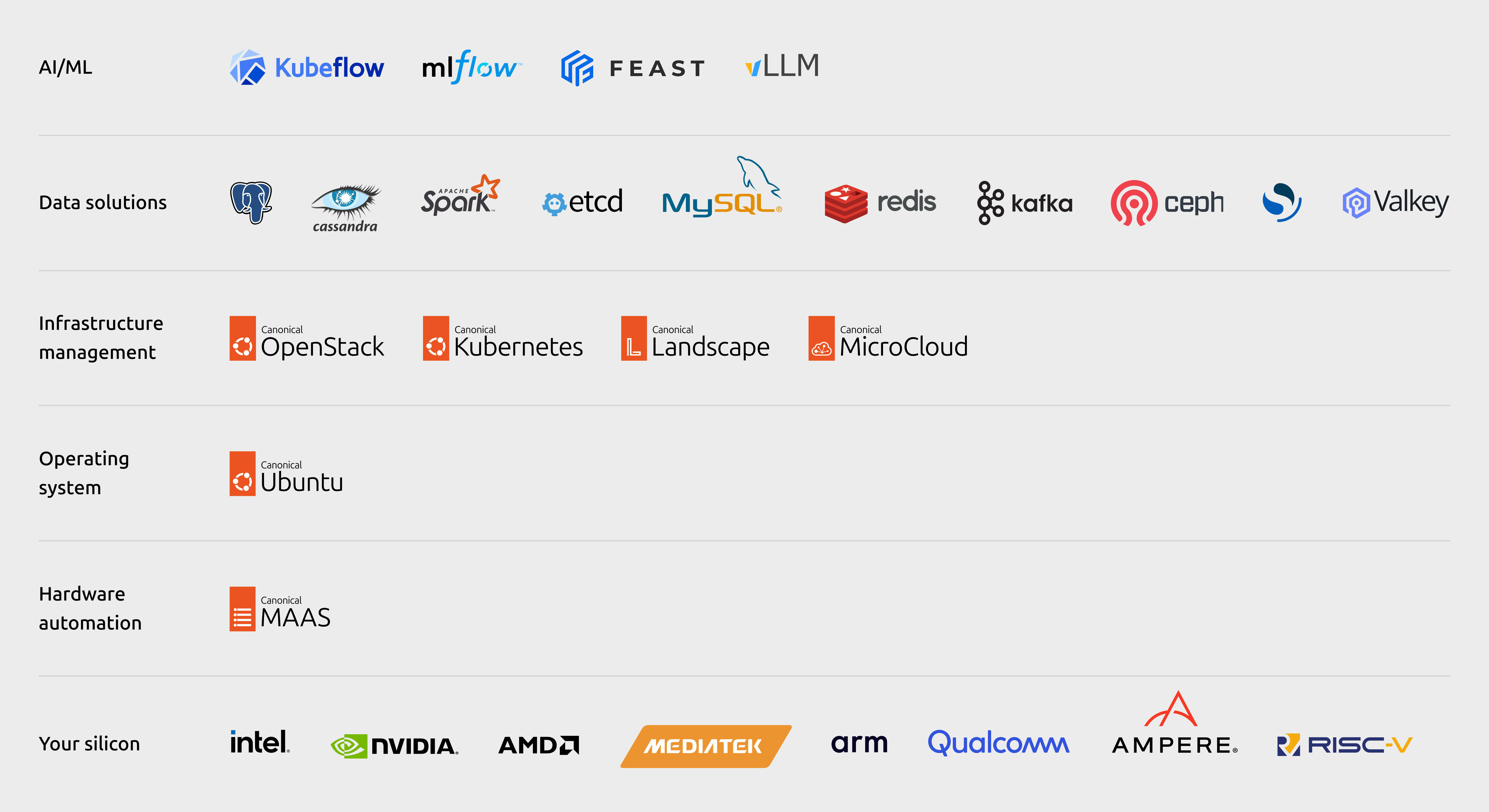

Full-stack AI infrastructure

Hardware automation

Automate the provisioning and management of your data center hardware, including the GPUs, SmartNICs, and DPUs you need to drive your AI workloads.

Operating system

Ubuntu is the operating system of choice for AI. Get access to the broadest ecosystem of machine learning tools and libraries, and diversify your silicon supply chain for resiliency with an OS optimized for all the leading architectures.

Infrastructure management

Build your cloud, modernize your workloads for AI, and manage your entire Ubuntu estate. Enjoy bottom-up infrastructure automation so you can focus on your business.

Data solutions

There's no AI without data. Canonical delivers a comprehensive portfolio of integrated open source solutions for database management, data processing, search, and caching.

MLOps

Our modular MLOps platform equips you with everything you need to bring your models all the way from experimentation to production.

Optimized for leading silicon

Solve the challenge of silicon enablement with AI infrastructure solutions that run optimally across all major architectures.

Tested and certified with the world's largest hardware vendors

Accelerate time-to-value with validated hardware.

Private, public, sovereign, or hybrid

Choose the ideal AI infrastructure for your use cases. For instance, start quickly with no risk and low investment on public clouds, then migrate your workloads to your private cloud as you scale.

With Canonical's solutions, you can run your workloads anywhere, including sovereign, hybrid, and multi-cloud environments.

Trusted across clouds

Get started easily on your cloud of choice with optimized and certified Ubuntu images.

Kubernetes optimized for AI

Doing more with AI means modernizing your workloads. Modernized workloads mean containerization – and containers need Kubernetes.

Canonical Kubernetes is optimized for AI/ML performance, with features that increase processing power and reduce latency, developed and integrated in close collaboration with NVIDIA.

AI infrastructure in the real world, powered by Canonical

Lowering the cost and complexity of critical space missions

We wanted one partner for the whole on-premise cloud because we’re not just supporting Kubernetes but also our Ceph clusters, managed Postgres, Kafka, and AI tools such as Kubeflow and Spark. These were all the services that were needed and with this we could have one nice, easy joined-up approach.

Michael Hawkshaw, IT Service Manager, European Space Agency

Fortune 500 fintech company goes from constant troubleshooting to scalable machine learning

We like the whole integration umbrella that Canonical offers with Canonical Kubeflow. We have easy access to popular ML frameworks like TensorFlow, PyTorch, and XGBoost, as well as the native Kubernetes tools for monitoring and logging. It helps our data scientists quickly build and experiment with their models in an efficient manner.

Senior data scientist, Fortune 500 financial services company

Building a sustainable cloud for AI workloads

The level of engagement from the Canonical team was remarkable. Even before we entered into a commercial agreement, Canonical offered valuable advice around OEMs and hardware choices. They were dedicated to our project from the get-go.

Tim Rosenfield, CEO and Co-Founder, Firmus

Machine learning drives down operational costs

Partnering with Canonical lets us concentrate on our core business. Our data scientists can focus on data manipulation and model training rather than managing infrastructure.

Machine learning engineer, entertainment technology company

Cost-effective, security-maintained, and supported

Canonical maintains and supports all the open source tooling in your AI infrastructure stack, including Kubernetes and open source cloud management solutions like OpenStack.

Enterprise support for the entire stack is available with a transparent subscription, Ubuntu Pro, so you have full control over your TCO.

Fast-track compliance and run AI projects securely. Let us handle the CVEs.

Start your journey with a workshop

Feeling stuck? Our consulting services and workshop can help you move forward.

Our experts will work with you to define an optimal architecture tailored to your existing ecosystem and implement a scalable, resilient infrastructure across any environment – private, public, or hybrid cloud.