Michael Iatrou

on 12 October 2017

Kubernetes the not so easy way

A few years ago, the simplest method to deploy and operate Kubernetes on Ubuntu was with conjure-up. Whether the substrate is a public cloud (AWS, Azure, GCP, etc.), a private virtualized environment (VMware), or bare metal, conjure-up lets you quickly install a fully functional, production-grade Kubernetes.

But what if you wanted to delve a bit more into the details of the process? What if you wanted to use the core tools of the conjure-up apparatus directly?

Here is the task at hand: deploy Kubernetes on a bare metal server. The control plane needs to be containerized and retain the same characteristics as a production environment (observability, scalability, upgradability, etc.). The pool of worker nodes needs to be elastic, so that nodes can be added or removed on demand without disrupting existing services. Extra points for sane networking.

You will need a machine equipped with at least 4 CPU cores, 16GB RAM, 100GB of free disk space (preferably SSD), and one NIC. As I am writing this, I am using MAAS to deploy Ubuntu 16.04.3 on such a machine. I have also configured a Linux bridge (br0) and attached the NIC (eth0) to it, using MAAS’ network configuration capabilities. Moreover, MAAS will serve as the DHCP and DNS server.
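Before going further, it can help to confirm that the machine actually meets those minimums. The following is a minimal sketch, not part of the original walkthrough: the function names `meets_minimum` and `preflight` are made up for illustration, and checking the root filesystem (`/`) for free space is an assumption about where LXD will store its containers.

```shell
#!/usr/bin/env bash
# Preflight sketch for the minimums quoted above: 4 cores, 16GB RAM, 100GB free disk.
# Function names and the choice of "/" as the checked filesystem are assumptions.

# meets_minimum NAME ACTUAL REQUIRED
# Prints a verdict and returns non-zero when ACTUAL is below REQUIRED.
meets_minimum() {
    local name=$1 actual=$2 required=$3
    if [ "$actual" -ge "$required" ]; then
        echo "ok:   $name = $actual (need >= $required)"
    else
        echo "FAIL: $name = $actual (need >= $required)"
        return 1
    fi
}

# preflight: gather the three figures from the running system and check each one.
preflight() {
    local cores ram_gb disk_gb rc=0
    cores=$(nproc)
    # MemTotal is in kB; round to the nearest GiB so a "16GB" box is not reported as 15.
    ram_gb=$(awk '/^MemTotal:/ {print int($2 / 1024 / 1024 + 0.5)}' /proc/meminfo)
    # df -Pk reports available space in 1024-byte blocks (column 4 of the data row).
    disk_gb=$(df -Pk / | awk 'NR == 2 {print int($4 / 1024 / 1024)}')

    meets_minimum "cpu_cores" "$cores" 4 || rc=1
    meets_minimum "ram_gb" "$ram_gb" 16 || rc=1
    meets_minimum "free_disk_gb" "$disk_gb" 100 || rc=1
    return "$rc"
}
```

Sourcing the file and calling `preflight` prints one line per resource and returns a non-zero status if any of them falls short.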

We will be using machine containers (LXD), since they provide virtual machine operational semantics with bare metal performance. We will also leverage Juju and the Charmed Kubernetes bundle (yes, the same bundle that we use for production deployments on public cloud and bare metal).

Let’s SSH into our freshly deployed Xenial machine as user ubuntu and update the critical components, LXD and Juju, to their latest stable versions:

$ sudo add-apt-repository ppa:juju/stable -y
$ sudo add-apt-repository ppa:ubuntu-lxc/lxd-stable -y
$ sudo apt update
$ sudo apt dist-upgrade -y
$ sudo apt install lxd juju-2.0 -y

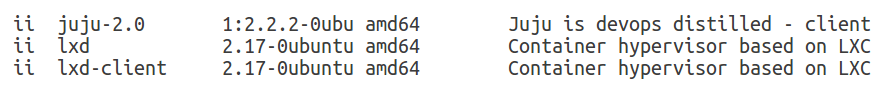

Here are the versions of our tools:

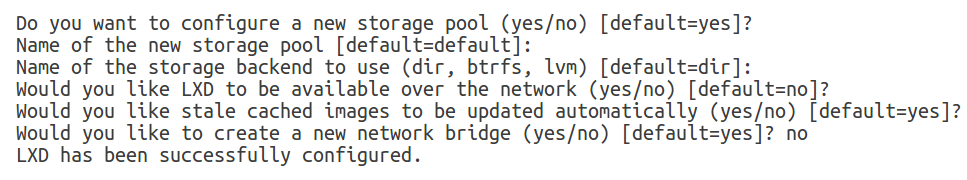

We can now initialize LXD:

We have skipped the creation of a new network bridge, because we want our LXD machine containers to use the existing bridge (br0). We are modifying the default LXD profile accordingly:

$ lxc network attach-profile br0 default eth0

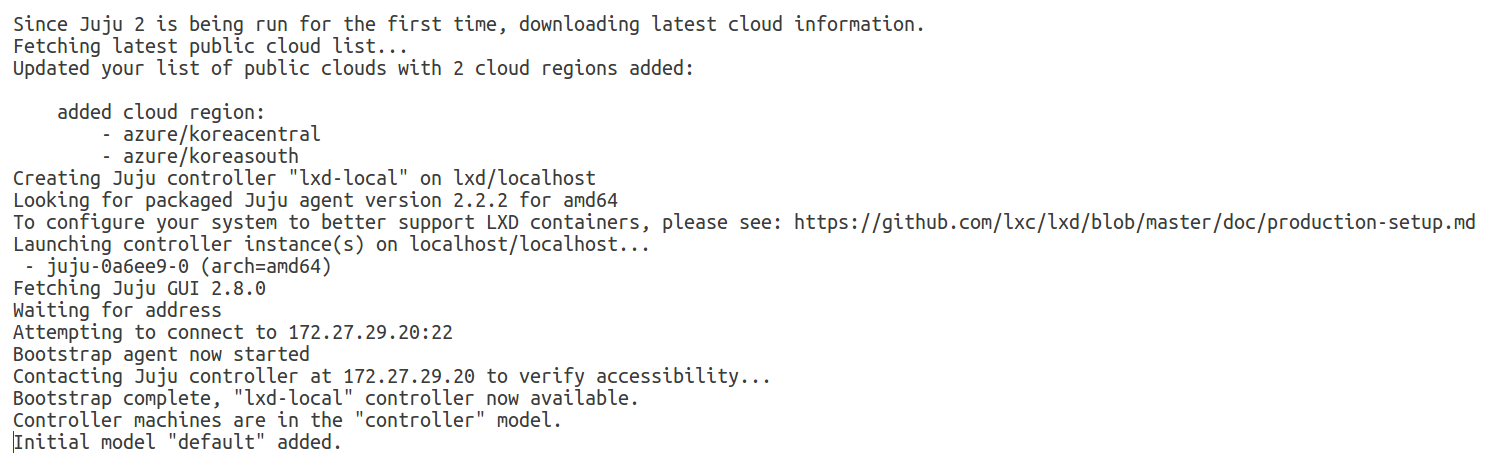

We are now ready to bootstrap our local Juju controller:

$ juju bootstrap lxd lxd-local

The Juju controller is now instantiated! As part of the process, two new LXD profiles have been created:

$ lxc profile list
+-----------------+---------+
| NAME            | USED BY |
+-----------------+---------+
| default         | 0       |
+-----------------+---------+
| juju-controller | 1       |
+-----------------+---------+
| juju-default    | 0       |
+-----------------+---------+

Let’s create a new model for our k8s deployment:

$ juju add-model kubernetes
$ juju models
Controller: lxd-local

Model        Cloud/Region         Status     Machines  Cores  Access  Last connection
controller   localhost/localhost  available         1      -  admin   just now
default      localhost/localhost  available         0      -  admin   just now
kubernetes*  localhost/localhost  available         0      -  admin   never connected

Juju will automatically switch the active model to “kubernetes”. It will also create a new LXD profile, associated with this model:

$ lxc profile list
+-----------------+---------+
| NAME            | USED BY |
+-----------------+---------+
| default         | 0       |
+-----------------+---------+
| juju-controller | 1       |
+-----------------+---------+
| juju-default    | 0       |
+-----------------+---------+
| juju-kubernetes | 0       |
+-----------------+---------+

So, Juju not only provides isolation through models, but ensures that if a model requires customized LXD containers, no other existing or future LXD profiles will be affected.

For Kubernetes, we will customize the juju-kubernetes profile to enable privileged machine containers and add an SSH key to it. Create a new YAML file juju-lxd-profile.yaml with the following configuration:

name: juju-kubernetes
config:
  user.user-data: |
    #cloud-config
    ssh_authorized_keys:
      - @@SSHPUB@@
  boot.autostart: "true"
  linux.kernel_modules: ip_tables,ip6_tables,netlink_diag,nf_nat,overlay
  raw.lxc: |
    lxc.aa_profile=unconfined
    lxc.mount.auto=proc:rw sys:rw
    lxc.cap.drop=
  security.nesting: "true"
  security.privileged: "true"
description: ""
devices:
  aadisable:
    path: /sys/module/nf_conntrack/parameters/hashsize
    source: /dev/null
    type: disk
  aadisable1:
    path: /sys/module/apparmor/parameters/enabled
    source: /dev/null
    type: disk

Make sure that you have generated an SSH key pair for user “ubuntu”, before you execute the following one-liner:

$ sed -ri "s'@@SSHPUB@@'$(cat ~/.ssh/id_rsa.pub)'" juju-lxd-profile.yaml
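The one-liner fails silently if the key file is missing or the placeholder has already been replaced. As a sketch only, the same substitution can be wrapped in a small function that checks both conditions first. The name `inject_ssh_key` is hypothetical, and the function assumes GNU sed (as shipped with Ubuntu) and a key comment that contains neither `'` nor `&`.

```shell
#!/usr/bin/env bash
# inject_ssh_key PROFILE_FILE PUBKEY_FILE
# Replaces the @@SSHPUB@@ placeholder in PROFILE_FILE with the public key.
# Hypothetical helper; assumes GNU sed and no "'" or "&" in the key comment.
inject_ssh_key() {
    local profile=$1 pubkey_file=$2 key
    if [ ! -r "$pubkey_file" ]; then
        echo "public key not found: $pubkey_file" >&2
        return 1
    fi
    if ! grep -q '@@SSHPUB@@' "$profile"; then
        echo "placeholder @@SSHPUB@@ not found in $profile" >&2
        return 1
    fi
    # A public key file holds a single line; read just that line.
    key=$(head -n 1 "$pubkey_file")
    # Same substitution as the one-liner: "'" is the sed delimiter because
    # base64 key material can contain "/".
    sed -i "s'@@SSHPUB@@'${key}'" "$profile"
}
```

With the function sourced, `inject_ssh_key juju-lxd-profile.yaml ~/.ssh/id_rsa.pub` does the same job as the one-liner. If no key pair exists yet, `ssh-keygen -t rsa` creates one.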

Then update the juju-kubernetes LXD profile:

$ lxc profile edit "juju-kubernetes" < juju-lxd-profile.yaml

Final step, deploy Kubernetes already!

$ juju deploy canonical-kubernetes-101

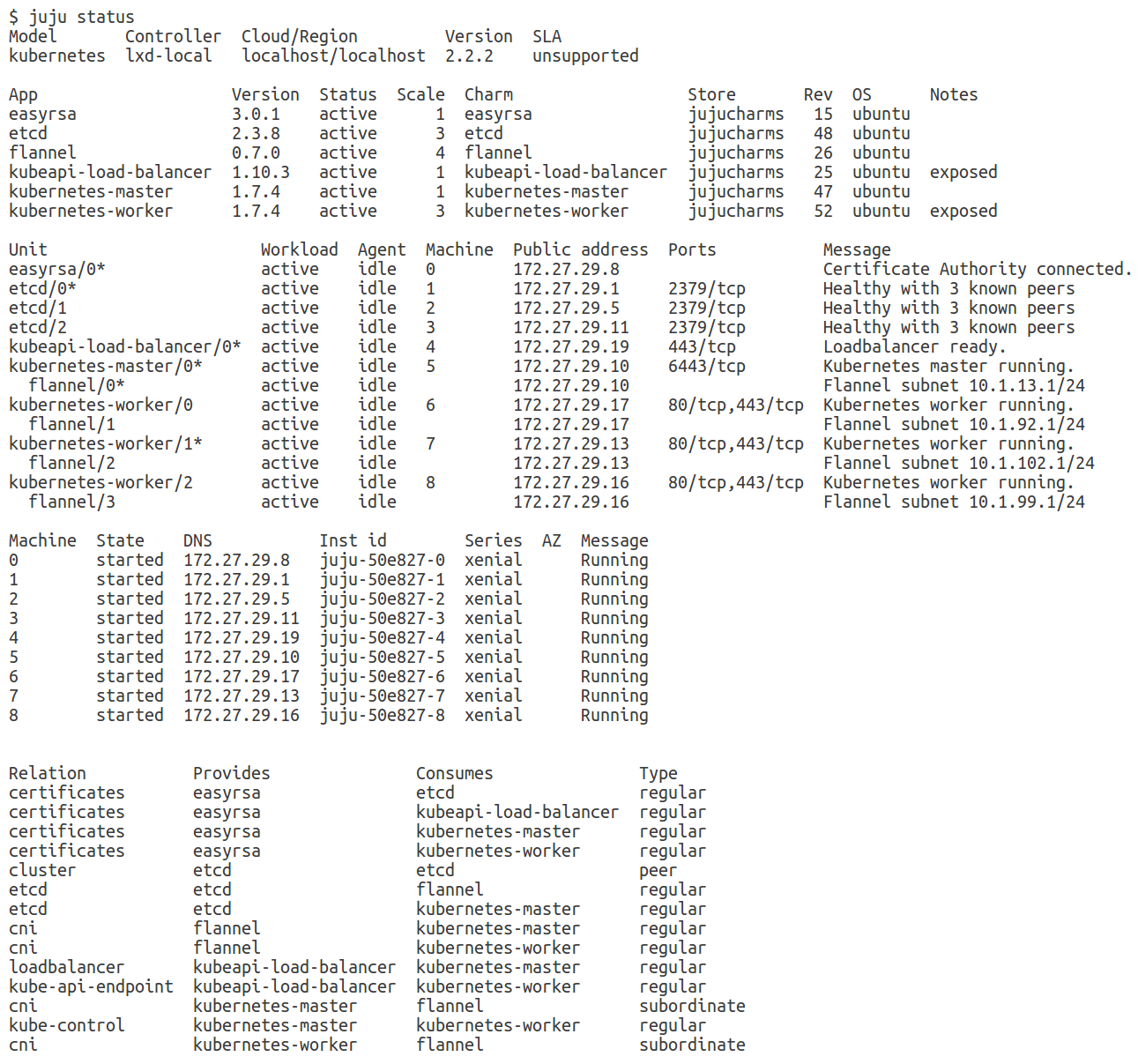

You may have noticed that I use version 101 of the canonical-kubernetes bundle. I could just as well have omitted the version number and allowed Juju to automatically get the latest available version. It’s going to take only a few minutes (or more, if you don’t have that SSD I mentioned earlier), before everything is successfully deployed:

All done, let’s start exploring! We need kubectl and the “admin” credentials to interact with the cluster. Install the former as a snap and copy the k8s config using Juju:

$ sudo snap install kubectl --classic
kubectl 1.7.4 from 'canonical' installed
$ mkdir -p ~/.kube
$ juju scp kubernetes-master/0:config ~/.kube/config

For the k8s UI experience, get the URL and credentials using:

$ kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://172.27.29.19:443
  name: juju-cluster
contexts:
- context:
    cluster: juju-cluster
    user: admin
  name: juju-context
current-context: juju-context
kind: Config
preferences: {}
users:
- name: admin
  user:
    password: shannonWouldBeProud
    username: admin
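To pull a single value such as the API server URL or the password out of that output without reading through it, a small filter is enough. This is a rough sketch: `kubeconfig_field` is a hypothetical helper, and it relies on the layout shown above, where each key of interest sits alone on its own line as `key: value`.

```shell
#!/usr/bin/env bash
# kubeconfig_field KEY
# Reads kubeconfig YAML on stdin and prints the value of the first "KEY: value" line.
# Hypothetical helper; it matches on the key name only and ignores YAML nesting.
kubeconfig_field() {
    awk -v key="$1:" '$1 == key {print $2; exit}'
}
```

For example, `kubectl config view | kubeconfig_field server` prints the URL to open in a browser, and `kubeconfig_field password` prints the matching credential.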

We now have a fully operational Kubernetes cluster, on bare metal, with bridged networking, not very different from what conjure-up deploys. Most importantly, we got a glimpse of how Juju and LXD are used behind the scenes. Of course conjure-up offers much more functionality and evolves quickly: its upcoming release adds support for Helm and Deis… The joy is in the journey, but keep moving fast.
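The elasticity asked for in the task description comes from Juju itself: worker nodes are units of an application, so they can be added and removed with `juju add-unit` and `juju remove-unit`. The sketch below wraps those two commands. It assumes the worker application in the bundle is named `kubernetes-worker`, and the helper names and the `DRY_RUN` switch are inventions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: grow and shrink the worker pool with Juju.
# Assumes the bundle's worker application is called "kubernetes-worker";
# run, add_workers, remove_worker and DRY_RUN are hypothetical names.

# run CMD...: execute the command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# add_workers [COUNT]: add COUNT worker units (default 1).
add_workers() {
    run juju add-unit kubernetes-worker -n "${1:-1}"
}

# remove_worker UNIT_NUMBER: remove one worker unit, e.g. kubernetes-worker/3.
remove_worker() {
    run juju remove-unit "kubernetes-worker/$1"
}
```

Setting `DRY_RUN=1` before calling `add_workers 2` prints the Juju command instead of running it, which is a cheap way to review the change before touching a live model.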

Learn more about Charmed Kubernetes or reach out to us about your Kubernetes challenges and use cases.