Andreea Munteanu

on 1 November 2024

Why should you use an official distribution of Kubeflow?

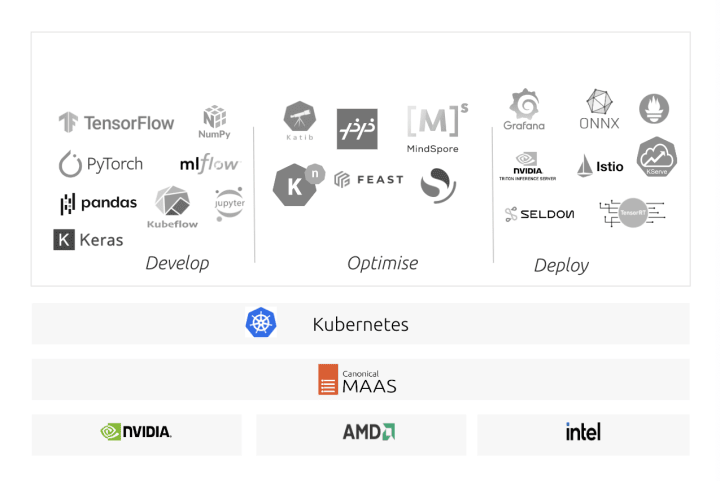

Kubeflow is an open source MLOps platform that is designed to enable organizations to scale their ML initiatives and automate their workloads. It is a cloud-native solution that helps developers run the entire machine learning lifecycle within a single solution on Kubernetes. It can be used to develop, optimize and deploy models.

This blog will walk you through the benefits of using an official distribution of the Kubeflow project. It will include key considerations, benefits, the differences from the upstream project and how to get started with one of them. We will focus on Charmed Kubeflow, Canonical’s distribution of Kubeflow.

A Brief History of Kubeflow

Kubeflow is a project that Google started in 2018, and shortly after, organizations such as Amazon, Intel, Bloomberg and Apple got involved in the project. It is organized into working groups that focus on the development of different components. Canonical was one of the initial contributors. We’ve been involved since the early days, leading multiple releases and two of the working groups.

Kubeflow’s philosophy was to bring together and integrate existing tooling on the market, such as Jupyter Notebook (known nowadays as Kubeflow Notebooks). From the outset, Kubeflow founders saw one of the biggest challenges of the ML landscape, tooling fragmentation, and tried to address it. There was also a gap in the market due to the lack of any tooling to automate ML workloads, so the Kubeflow project introduced Kubeflow Pipelines for this purpose.

The very first release, Kubeflow 0.7, arrived in October 2019. At the moment, there are two releases every year that bring together new enhancements and integrations to continue addressing the latest challenges from the AI landscape and enable developers from all industries to innovate. The release lead ensures that all working groups have a roadmap and that features are delivered according to the timeline.

Nowadays, Kubeflow is part of the Cloud Native Computing Foundation (CNCF) as an incubating project. You can join the community and start contributing right away!

What is Charmed Kubeflow?

Charmed Kubeflow (CKF) is an official distribution of Kubeflow, created by Canonical. It delivers all the enhancements that come to the upstream project, but goes beyond to provide an enterprise-grade MLOps platform. It is designed to streamline operations and secure the packages and container images, and it gives users the option of enterprise support and managed services.

Canonical closely follows the release cycle of the upstream project, with Charmed Kubeflow being available at the same time. Additionally, at least two weeks before the release, we run a beta program, where AI practitioners can try out the latest features from Kubeflow, provide feedback and contribute to the project. Here is the livestream from the latest release, Kubeflow 1.9, where our team talked about the latest enhancements.

Why use an official distribution of Kubeflow?

Kubeflow is a fully open source project, which lowers the barrier to entry and enables organizations to quickly get started and validate whether the platform meets their requirements. Official distributions, such as Charmed Kubeflow, provide additional functionality to make the platform suitable and easy to use at scale and in production. Here are the top reasons to consider using an official distribution:

Security maintenance

Kubeflow has over 100 container images that come from different sources, run on different operating systems and are secured to different levels. Whenever organizations want to run their ML projects in production, they need to use secure tooling to protect their data and artifacts. Therefore, using a Kubeflow distribution that patches vulnerabilities in the container images not only protects organizations from security risks but also enables them to move beyond experimentation with their projects.

Streamlined operations

Kubeflow is still difficult to operate, with simple tasks such as upgrades being challenging. Charmed Kubeflow uses a different packaging method, helping MLOps engineers to more easily manage the platform. Tasks such as tooling integrations or upgrades can be performed with just a few commands. What’s more, Charmed Kubeflow benefits from enhancements such as automated profile creation and simpler user management, which are not available yet in the upstream project.

Tooling integration

Kubeflow enables AI practitioners to run the entire machine-learning lifecycle within one tool. However, organizations are likely already using tools for different capabilities, such as Spark for data streaming, different identity providers for user management or vector databases for Retrieval Augmented Generation (RAG) use cases.

Official distributions integrate the MLOps platform with these additional tools, so that organizations can start scaling their ML initiatives without having to change their entire infrastructure. Charmed Kubeflow, for example, includes integrations with tools such as Grafana, Prometheus and Loki for observability, which are foundational when running ML in production.

Hardware validation

As the market evolves, there is a growing number of hardware types that can be used to develop and deploy models. From different architectures, such as x86, ARM or RISC-V, to various vendors such as NVIDIA, Intel or AMD, organizations are building their AI infrastructure keeping in mind the computing power needed. Having an MLOps platform that is already tested and validated on this hardware will accelerate project delivery and minimize the burden on solution architects.

Canonical is collaborating with the major silicon vendors to ensure that our portfolio is optimized for ML workloads. From having the necessary drivers and operators, such as GPU operators, already available in Ubuntu, to enabling Charmed Kubeflow on a wide range of hardware types, we aim to offer our customers and partners a solution that is validated. For example, Charmed Kubeflow is one of the few MLOps platforms that are certified for NVIDIA DGX Systems, one of the leading AI hardware types available on the market.

Hybrid and multi-cloud scenario

Open source platforms such as Kubeflow are often preferred due to their portability across different environments. Charmed Kubeflow augments these capabilities, enabling organizations not only to use the same tooling on public cloud and on-prem, but also to run hybrid and multi-cloud scenarios. Since it is a cloud-native application that is validated on a wide range of Kubernetes distributions (including AKS, EKS, GKE and Canonical Kubernetes), Charmed Kubeflow also gives enterprises further freedom when they design their AI architecture, enabling them to continue using their existing infrastructure while having the option to migrate as their ML projects mature.

Enterprise support

Kubeflow can be used by anyone, yet the upstream project only gets help from the community. The lack of enterprise support is often a blocker for organizations that are looking to use the MLOps platform. As the company behind Charmed Kubeflow, Canonical provides 24/7 support, timely bug fixing, as well as…

Managed services

Managing an MLOps platform such as Kubeflow can often be challenging, because of the lack of both tooling and skills that organizations face. Canonical provides managed services for Charmed Kubeflow, helping organizations focus on their ML projects rather than operating the platform. We take full responsibility for managing the platform, including 24/7 monitoring, upgrades and bug fixes. Our engineers work closely with our customers to ensure a smooth experience where operations are no longer a challenge.

[Read more about one of our customers, who has been using our platform with managed services]

Customer feedback and enhancements

Open source welcomes contributions from anyone, but it can often take some time until new features are developed by the community. As part of our commercial engagements, we work closely with our customers to understand where there are gaps in the product, taking their feedback into account when building our roadmaps. This often leads to enhancements being developed faster, bugs being fixed more quickly, and further integrations being added to our portfolio.

End-to-end solution

Organizations are often looking not just for an MLOps platform, but rather for an end-to-end solution for their AI infrastructure. Identifying the right tooling and stitching it together tends to be time-consuming and ineffective, causing delays in project delivery and higher costs than expected. Canonical’s capabilities go beyond the MLOps platform, providing an end-to-end solution for AI infrastructure.

Using an official distribution like Charmed Kubeflow means having a partner that prioritizes the performance and security of the MLOps platform, and puts your specific needs first. It means getting your projects to production faster, having access to new capabilities sooner, and freeing up more time to focus on building your models.